The web doesn't need HTTPS everywhere, but it does need HTTPS Everywhere

Mozilla has recently announced that Firefox will block mixed "active" content by default in Firefox 23, after Chromium and even Internet Explorer implemented similar measures. "Mixed content" refers to content being served over HTTP (i.e. plaintext) from within an HTTPS (i.e. HTTP-over-TLS) webpage. "Active" content refers to content which can modify the DOM of a webpage, i.e. any resource which, once loaded, modifies the way the page it is included in is rendered. Blocking mixed content is an attempt to solve multiple problems:

- Web developers implementing HTTPS incorrectly or half-assedly.

- Non-HTTPS-only cookies, referer headers, user-agent strings, and other sensitive data leaking more than they need to.

- Encouraging HTTPS to be more widespread – certainly a good thing despite HTTPS's shortcomings.

The purpose of this post is to explain why browsers are moving in this direction, why it is a good thing, and why HTTPS Everywhere goes further in that direction.

The dangers of mixed content

It needs to be repeated that Firefox's default mixed content blocking only applies to mixed active content. Active content refers to any resource which can modify the page it is used on. What exactly does that mean?

JavaScript

The elephant in the room. JavaScript can do whatever it pleases to a webpage: it can completely rewrite the page, it can mine Bitcoins, it can request other resources (including other JavaScript files), make arbitrary HTTP requests, it can do OpenGL, it can record data from the camera and the microphone, etc. Definitely "active".

Flash/Java/etc

Arbitrary plugins can do arbitrary things. Flash can execute JavaScript, and Java can execute potentially unsandboxed code. It goes without saying that this content is also "active".

CSS

This one isn't so obvious, but it makes a lot of sense once you realize that being able to MITM the CSS file of https://legit-website.tld/user/my-secret-username allows you to do something like this:

    body {
        background: url(http://legit-website.tld/404-this-webpage-probably-doesnt-exist-wqtrfey90u45h);
    }

When the page is rendered, the browser requests that background URL over plain HTTP and attaches every cookie set by legit-website.tld that is not HTTPS-only (i.e. lacks the Secure flag). A network snooper can read those cookies off the wire, then use them to take over your session and impersonate you.
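The leak can be illustrated with a minimal sketch of the decision a browser makes when attaching cookies to a request. The `Cookie` class and the `cookies_sent` helper are hypothetical names made up for this example, and the model is deliberately simplified: it ignores domain and path matching, expiry, and everything else except the Secure flag.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit


@dataclass(frozen=True)
class Cookie:
    name: str
    value: str
    secure: bool  # True if the cookie was set with the "Secure" attribute


def cookies_sent(jar: list[Cookie], url: str) -> list[Cookie]:
    """Return the cookies attached to a request for `url`.

    Simplified model: only the Secure attribute is taken into account.
    """
    is_https = urlsplit(url).scheme == "https"
    return [c for c in jar if is_https or not c.secure]


jar = [
    Cookie("session", "s3cr3t", secure=False),  # also sent over plain HTTP
    Cookie("csrf", "abc123", secure=True),      # only sent over HTTPS
]

# The background URL injected into the CSS is fetched over plain HTTP,
# so every non-Secure cookie travels in the clear.
leaked = cookies_sent(
    jar,
    "http://legit-website.tld/404-this-webpage-probably-doesnt-exist-wqtrfey90u45h",
)
print([c.name for c in leaked])
```

In this sketch, marking the session cookie as Secure would be enough to keep it out of the injected request.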

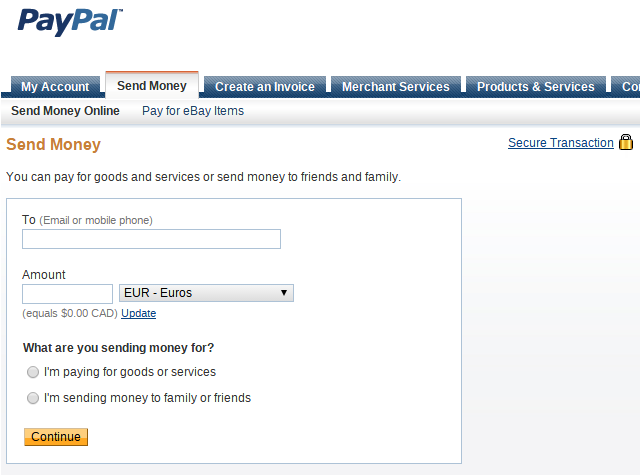

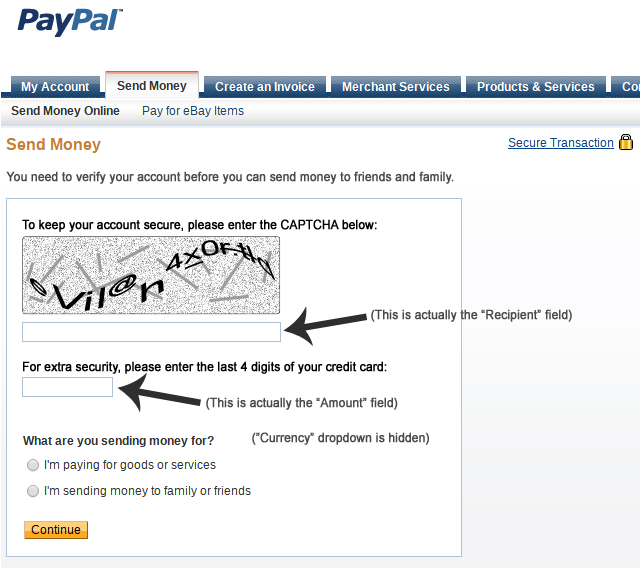

Not scary enough? How about this: By only modifying the CSS file of paypal.com, we can turn PayPal's innocent "Send money" webpage...

... into this much-less-innocent one:

You should definitely feel safer when CSS is being served over HTTPS. CSS is active content.

Fonts

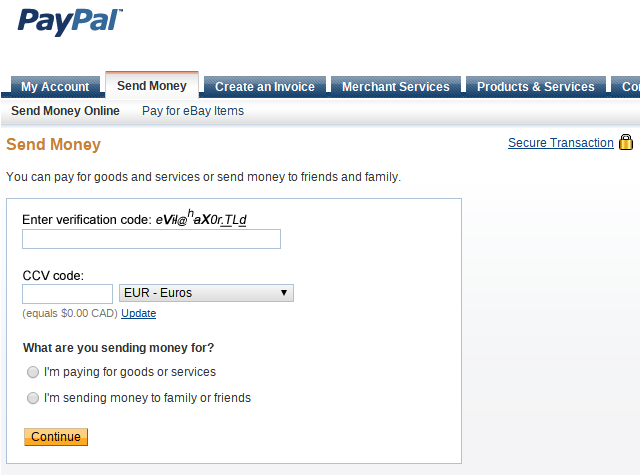

Font files sound harmless on paper, on the same rank as images/audio/video. However, being able to MITM font files allows you to effectively change the text on a page (to the human viewing the rendered text, not to the computer) by modifying the way characters look. That way, the PayPal webpage could be made to look something like this:

... but only if the PayPal page in question uses custom fonts for these fields. Not as easy to pull off or as terribly effective as the CSS one, but still something that should be avoided if possible. Therefore, fonts are active content.

Images

Images are passive content. An attacker able to MITM them may replace them with inappropriate content, or with images containing misleading text, but the risk of abuse is not very high.

You may argue that replacing conveniently-located images on the page may yield a similar effect (for example, if there was an image right above the "Recipient" field, you could try to get the user to enter something in it by telling them to do so in the image). However, it is seldom possible. Fonts, on the other hand, allow the attacker to change any text on the webpage that uses this font. Additionally, a broken font isn't a very big deal (the text is simply displayed with the next font in the fallback list, or with the browser's default font); the webpage is still usable without all of its custom fonts. On the other hand, removing images from a webpage is much more likely to cause breakage or make the webpage unusable.

Audio/video

Similar to images, these may be able to give wrong information to the user, but cannot change the webpage itself.

Frames

While frames can't modify the page they are embedded in, they can try to blend into it as much as possible, such that it is hard to tell that there is a frame at all on the webpage (from the perspective of someone who has never viewed the page before). Therefore, it is better to force them to be served over HTTPS. Otherwise, an HTTP frame inside an HTTPS webpage may lead the user to think they are typing information in a secure form, when in fact they are typing it inside the insecure frame within the HTTPS webpage.
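The classification above can be summed up as a small decision function. This is only a sketch of this article's classification, not of any browser's actual implementation; the type labels and the function names `is_mixed` and `should_block` are made up for the example.

```python
from urllib.parse import urlsplit

# Labels follow the sections above; they are illustrative names,
# not browser-internal identifiers.
ACTIVE_TYPES = {"script", "plugin", "stylesheet", "font", "frame"}
PASSIVE_TYPES = {"image", "audio", "video"}


def is_mixed(page_url: str, resource_url: str) -> bool:
    """A resource is mixed content when an HTTPS page loads it over HTTP."""
    return (urlsplit(page_url).scheme == "https"
            and urlsplit(resource_url).scheme == "http")


def should_block(page_url: str, resource_url: str, resource_type: str) -> bool:
    """Block mixed active content; let mixed passive content through."""
    if not is_mixed(page_url, resource_url):
        return False
    if resource_type in ACTIVE_TYPES:
        return True
    if resource_type in PASSIVE_TYPES:
        return False
    # Assumption: unknown types are treated as active, erring on the safe side.
    return True


print(should_block("https://legit-website.tld/", "http://legit-website.tld/app.js", "script"))
print(should_block("https://legit-website.tld/", "http://legit-website.tld/logo.png", "image"))
```

The first call reports that the script would be blocked, while the second lets the image through.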

What HTTPS provides

What do we get from HTTPS? First, it is important to state what may be obvious to some: HTTP headers may contain important data. This includes authentication cookies (the possession of which allows a network snooper to log in as someone else), referer information (the URL of the page the user came from; this may be private, or contain confidential URL parameters), and various identifying bits of information such as the user-agent, accept-language header, etc. Some of these headers are always worth protecting (authentication cookies). Some of these are sometimes not that confidential (accept-language). Some of these headers may not exist or may not be necessary for a request to be successful (tracking cookies).

So what does HTTPS give us?

- Confidentiality: No one but the client and the server knows what is being transmitted over the connection. This means information such as form data, account credentials, cookie information, referer header, etc. is all encrypted.

- Integrity: The client can be certain that the content was not modified while in transit.

- Authentication: The client can be certain that the content it received came from the server it thinks it came from.

Sounds good, right? Unfortunately, using HTTPS also means:

- Higher latency: The TLS handshake is not free. When initiating a secure connection, the client and the server need to exchange cryptographic information before they can communicate securely. This adds a bit of overhead to every connection: 2 roundtrips for a naïve implementation. Fortunately, this can be minimized a lot (down to zero in the best case) using TLS False Start, Next Protocol Negotiation, Snap Start, or even new network protocols like QUIC.

- Slower throughput: Of course, encrypting and decrypting data when sending/receiving it takes more time than just sending/receiving raw data. However, it has been shown that this extra overhead is not very significant.

- $$$$: Free SSL certificates exist, but are pretty rare to come by and usually come with significant limitations (good luck getting a free wildcard certificate). And if you want to go for an Extended Validation (EV) Certificate (pretty much a requirement if you want to sell anything online), be ready to fork over major amounts of money. The flawed trust model of SSL is not the topic of this post, but plays an active role in hampering its widespread use.

- Doesn't hide everything: HTTPS doesn't hide your IP, or the fact that you are requesting data from a certain server. It also doesn't hide the domain name you're accessing, because most HTTPS client implementations now use SNI, which reveals the domain name you are browsing in plaintext.

- Hard to get right: Setting up the web server is one thing, making sure the website is built for it is another, and making sure things keep scaling afterwards is yet another challenge. This is where many of the mixed content problems come from: developers on a deadline, developers who do not fully understand the implications of this change, or developers who simply make mistakes.

HTTPS is not required everywhere

Given HTTPS's limitations, it may seem sensible to not use it when it is not completely necessary. Indeed, certain scenarios only require a subset of HTTPS's security properties:

- Browsing websites where censorship is not an issue and is not likely to become one in the future: websites such as the Portal Wiki, personal websites such as the one you're reading right now, corporate websites that just describe the company they're about, news sites or blogs covering non-sensitive subjects, etc. This type of website usually doesn't need any of HTTPS's security properties. As long as you don't log in, the only thing HTTPS would achieve is hiding the names of the pages you're reading from whoever is listening on your connection, and you can easily browse such websites anonymously if you feel a need to.

- Publicly-verifiable information can benefit from confidentiality (so that network snoopers can't know what you are looking up), but not necessarily from integrity or authentication since the information you are getting can be verified in other ways. This includes Usenet/mailing list archives (subscribe to the mailing list if you want to verify that the web archives match the real content), public governmental and court records (go get the paper records if you want to verify them), etc. In these cases, integrity and authentication would only be a convenience, not a necessity.

- Passive content, such as images present on every webpage of a website (logo, header, footer, favicon, etc) doesn't need HTTPS. Having those served over HTTP is not a big deal, as long as the referer header doesn't leak (which is the case if the originating webpage is served over HTTPS). On the other hand, passive content present on a subset of a website's pages should be served over HTTPS in order to avoid leaking information about which page a user is viewing.

- Active mixed content that is present on every webpage of a website doesn't need confidentiality; it only needs integrity and authentication. Indeed, any network snooper knows you're browsing cool-web-two-point-oh-website.tld, and knows that all webpages on cool-web-two-point-oh-website.tld contain a <script> element which loads jQuery. Thus, the request for the JavaScript file containing jQuery doesn't need to be confidential. It does, however, need to have authentication and integrity so that it isn't possible to modify the JavaScript code in transit.

DomainKeys for HTTP?

What can we do about this? Well, a reasonable idea for the last item would be to extend DomainKeys to web content. DomainKeys is a system for email authentication (DKIM); it ensures that an email message was sent from a mail server that should have sent it. It works by adding a public key in a DNS record. When the DKIM-enabled mail server sends a message, it cryptographically signs some of the outgoing email's headers and the email's body using the private key corresponding to the key published in the DNS record. When a mail server receives this signed email, it grabs the public key of the sending domain from DNS, and verifies the signature against it. If the signature is valid, then the email is guaranteed to have come from the originating domain. (At least, as long as DNS itself can be trusted; yet another topic for a future post, perhaps?)

We could easily extend this concept to HTTP. A web server could cryptographically sign the response it is serving, and put this signature in an HTTP header of the response. The HTTP exchange would look like this:

Request:

    GET /js/jquery.js HTTP/1.1
    Host: legit-website.tld

The browser may initiate a DNS request for the public key of legit-website.tld in parallel with this request. This only needs to be done once per domain. The public key could also come from the SSL certificate of the originating webpage, in which case there is no need for any extra request.

Response:

    HTTP/1.1 200 OK
    Content-Type: application/javascript
    Content-Length: 1337
    X-DomainKeys-Signature: v=1; a=rsa-sha512; signature=(signature goes here)

    /*! jQuery v1.10.1 | (c) 2005, 2013 jQuery Foundation, Inc. | jquery.org/license
    //@ sourceMappingURL=jquery-1.10.1.min.map
    */
    (function(e,t) {
    ... jQuery code continues ...

The browser can then check the signature (contained in the X-DomainKeys-Signature header) against the public key obtained from DNS. If the signature is valid, then the JavaScript code is considered valid and is interpreted. If not, then the browser considers the request to have failed, and the JavaScript code is discarded. The signature should cover the body, but also the URL and the content-type, and should perhaps also contain a date range for validity, in order to prevent signatures from being used for resources other than the ones they were meant for, and to invalidate them after a period of time.
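To make the sign-then-verify flow concrete, here is a rough sketch. Everything in it is an assumption made for illustration: the key is a toy (textbook RSA over two small Mersenne primes, no padding, trivially breakable), the way the fields are concatenated before hashing is invented, and `sign`, `verify` and `not_after` are hypothetical names. A real design would rely on a vetted cryptography library and a carefully specified canonical encoding. It needs Python 3.8 or later for the modular inverse via `pow`.

```python
import hashlib
import time

# TOY KEY, for illustration only: textbook RSA without padding.
P, Q = 2**61 - 1, 2**89 - 1          # two small Mersenne primes
N = P * Q                            # public modulus (would be published in DNS)
E = 65537                            # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))    # private exponent (stays on the server)


def _digest(url: str, content_type: str, not_after: int, body: bytes) -> int:
    """Hash every field the signature must cover, reduced modulo N."""
    h = hashlib.sha512()
    for part in (url.encode(), content_type.encode(), str(not_after).encode()):
        # Length-prefix each field so that field boundaries are unambiguous.
        h.update(len(part).to_bytes(4, "big") + part)
    h.update(body)
    return int.from_bytes(h.digest(), "big") % N


def sign(url: str, content_type: str, not_after: int, body: bytes) -> int:
    """Server side: sign the digest with the private exponent."""
    return pow(_digest(url, content_type, not_after, body), D, N)


def verify(url, content_type, not_after, body, signature, now=None) -> bool:
    """Browser side: reject expired signatures and any mismatch."""
    now = time.time() if now is None else now
    if now > not_after:
        return False
    return pow(signature, E, N) == _digest(url, content_type, not_after, body)
```

Because the URL, the content-type and the expiry date are all hashed together with the body, changing any one of them makes verification fail, which is the property described above.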

This scheme could work for any HTTP content, mixed or not. It effectively moves the integrity and authentication properties into the HTTP protocol itself, rather than having it be done at the TLS level. It has no latency penalty and very little computational overhead, because the signature can be computed ahead of time and stored on disk (or in memory), only needing recomputation when the resource's body changes or when the signature expires.
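The "compute once, reuse until something changes" idea could look roughly like the sketch below. `SignatureCache` and its `signer` callback are hypothetical; the callback stands in for whatever signing routine the server would actually use. Hashing the body on every lookup is only a simple way to detect changes in this example; a real server would more likely rely on something cheaper, such as the file's modification time.

```python
import hashlib
from typing import Callable, Dict, Tuple


class SignatureCache:
    """Reuse a signature until the body changes or the signature expires."""

    def __init__(self, signer: Callable[[bytes, int], str], lifetime: int):
        self._signer = signer      # hypothetical: (body, not_after) -> signature
        self._lifetime = lifetime  # validity period, in seconds
        self._entries: Dict[str, Tuple[bytes, int, str]] = {}

    def signature_for(self, url: str, body: bytes, now: int) -> str:
        body_hash = hashlib.sha256(body).digest()
        cached = self._entries.get(url)
        if cached is not None:
            cached_hash, not_after, signature = cached
            if cached_hash == body_hash and now < not_after:
                return signature   # cache hit: no signing work needed
        # Cache miss, changed body, or expired signature: sign again.
        not_after = now + self._lifetime
        signature = self._signer(body, not_after)
        self._entries[url] = (body_hash, not_after, signature)
        return signature
```

With a cache like this, the expensive private-key operation happens once per resource version and validity period rather than once per request.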

Unfortunately, deploying this scheme would require modifying existing websites, existing web servers, existing HTTP libraries, and existing browsers. Is it really worth it, when we already have a solution which provides the security properties we are after?

The case for HTTPS Everywhere

Any scheme which tries to segment a website's resources according to their desired security properties is bound to fail. Developers already have a hard time deploying HTTPS correctly, if at all; it is not a good idea to rely on individual websites to properly deploy this scheme everywhere, in such a way that all HTTP resources are signed when needed.

It doesn't make sense from the browser's perspective either: When stumbling upon an HTTP-served resource inside an HTTPS webpage, the browser cannot guess whether this was intentional on the part of the developers (such that loading this resource over HTTP will not cause any privacy or security issues), or if it was just an oversight. As the second possibility is overwhelmingly more likely than the first, it is simply not advisable for browsers to choose to load mixed content by default. This could be worked around by adding an indication for the browser on the secure webpage, such as:

<script type="text/javascript" src="http-yes-really-i-know-this-is-mixed://legit-website.tld/js/jquery.js"></script>

... but that is clearly a hack. It still requires developers to carefully develop their applications and consider where information could leak, as opposed to simply boiling the sea and encrypting all the things. Encrypting everything may not be as efficient as it can be, but it's simple and does the job. This type of solution usually wins out, through the Worse Is Better principle, at least until it is not good enough anymore. HTTPS itself probably suffered from this as well; it wasn't until recently that its popularity rose significantly. The web needed wake-up calls such as Firesheep, sslstrip, Narus devices, and other large-scale traffic snooping operations, which prompted large targets such as Gmail, Twitter or Facebook to turn on HTTPS by default for all users.

Ultimately, HTTPS provides the security properties necessary for everything at a reasonable cost. It's not the ideal solution, but it is a solution, and it is the best we have that is widely available right now.

Enter HTTPS Everywhere by the Electronic Frontier Foundation. It is a browser extension which automatically switches HTTP requests to HTTPS for sites which support it. Effectively, it acts much the way your browser would on sites which have a permanent HSTS header. As a result, it addresses a lot of the practical shortcomings of HTTPS:

- Sites which support HTTPS but don't have an HSTS header now effectively have one. You now browse websites over HTTPS whenever it's possible to do so.

- Resources that HTTP sites load from domains that support HTTPS are downloaded over HTTPS, which avoids leaking your referer in plaintext on the way to these domains and prevents these resources from being MITM'd. This is a bigger deal than it appears; for example, a lot of websites include most of their JavaScript libraries from Google's CDN, rather than hosting a local copy of their own. As Google's CDN supports HTTPS, a lot of websites now have part of their JavaScript served securely.

- HTTPS websites that erroneously refer to HTTP resources (and would thus cause a "mixed content" error) now work correctly.

- When visiting a site that doesn't use HSTS headers (which is the vast majority of websites), you are not vulnerable to SSL stripping attacks anymore.

- When visiting a site that does use HSTS headers, you are not vulnerable to SSL stripping attacks when you visit it for the first time, or when its HSTS header expires.

- Typing a domain name in your browser's address bar goes to the HTTPS version of that website, rather than defaulting to HTTP.

The first half of this list is about fixing common problems resulting from poor HTTPS implementations on websites. The second half is about making the browser behave in a more secure-by-default way.
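At its core, the rewriting described above boils down to something like the following sketch. It is not the extension's actual code or ruleset format: `HTTPS_CAPABLE_HOSTS` is a made-up stand-in for its list of sites known to support HTTPS, `upgrade` is a hypothetical helper, and edge cases such as explicit port numbers are ignored.

```python
from urllib.parse import urlsplit

# Hypothetical stand-in for the extension's ruleset:
# hosts assumed to serve the same content over HTTPS.
HTTPS_CAPABLE_HOSTS = {"legit-website.tld", "ajax.googleapis.com"}


def upgrade(url: str) -> str:
    """Rewrite http:// to https:// for hosts known to support HTTPS."""
    parts = urlsplit(url)
    if parts.scheme == "http" and parts.hostname in HTTPS_CAPABLE_HOSTS:
        return parts._replace(scheme="https").geturl()
    return url  # already HTTPS, or host not known to support it


# A script embedded over HTTP from a CDN that supports HTTPS gets upgraded,
# no matter whether the embedding page itself is HTTP or HTTPS.
print(upgrade("http://ajax.googleapis.com/ajax/libs/jquery/1.10.1/jquery.min.js"))
```

Since the rewrite happens before the request leaves the browser, no plaintext request is ever made to a listed host, which is why SSL stripping no longer works against those hosts.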

Conclusion

Here's the takeaway in bullet-point form:

- HTTPS is far from perfect, but it's the best solution available right now, and it covers most use cases.

- Not everything needs HTTPS, but the (much) bigger problem is HTTPS not being used where it should be.

- Browsers are moving in the right direction, but slowly.

- We can't rely on all websites to implement HTTPS properly on their end.

- Right now, a good way to partially address the problem is to use HTTPS Everywhere.

Etienne Perot

Comments on "The web doesn't need HTTPS everywhere, but it does need HTTPS Everywhere" (3)

#1 — by Anonymous

Good article, however I have a little correction for you here:

There is already something like a "http-yes-really-i-know-this-is-mixed://"-scheme which is widely supported (even IE6) and doesn't look like a hack: "//"

When finding a URL like this: "//legit-website.tld/js/jquery.js", the browser will automatically use the loading document's scheme for building the absolute URL used to retrieve the resource.

This concept goes by the name "Protocol Relative URL": http://www.paulirish.com/2010/the-protocol-relative-url/ https://blog.httpwatch.com/2010/02/10/using-protocol-relative-urls-to-switch-between-http-and-https/ http://www.surfingsuccess.com/html/protocol-relative-url.html

#2 — by Etienne Perot

I am aware of protocol-relative URLs (in fact, this website used to use them extensively); however, that's not the same as the proposed http-yes-really-i-know-this-is-mixed://: http-yes-really-i-know-this-is-mixed:// only works on HTTPS webpages, and requests the resource over HTTP (hopefully making sure it contains a cryptographic signature in the headers of the response). From a network perspective, it would be equivalent to embedding an HTTP resource in an HTTPS webpage (i.e. causing a mixed content "error" on purpose). From a browser perspective, it is a way for it to know that the web developer behind the website explicitly wants the resource to be loaded over HTTP and not HTTPS, and thereby not have it considered a mixed content error by the browser. Protocol-relative URLs are a way for a web developer to not care whether or not the resource is loaded over HTTP or HTTPS, as long as it doesn't cause a mixed content error. That means the resource would go through HTTPS (along with the overhead that goes with it) if it were embedded on an HTTPS webpage.

#3 — by badon

The Perspectives add-on for Firefox solves most of the problems with using HTTPS. For example, the Perspectives "notaries" can tell you if a self-signed certificate is legitimately from the site you are intending to reach. Without Perspectives, that would be difficult or impossible. Even better, if Perspectives has high confidence that the certificate is legitimate, it can automatically bypass the warning messages about it, which makes the operation painlessly automatic. It's advanced certificate validation that's also so easy a Caveman Grandma can do it.